The rise of automated teaching technologies

We need to talk about robots.

Specifically, we need to talk about the new generation of AI-driven teaching technologies now entering our schools. These include various ‘autonomous interactive robots’ developed for classroom use in Japan, Taiwan and South Korea. Alongside these physical robots are the software-based ‘pedagogical agents’ that now provide millions of students with bespoke advice, support and guidance about their learning. Also popular are ‘recommender’ platforms, intelligent tutoring systems and other AI-driven adaptive tutoring tools – all designed to provide students with personalised planning, tracking, feedback and ‘nudges’. By capturing thousands of data-points on each of their students every day, vendors such as Knewton can now plausibly claim to know more about any individual’s learning than their ‘real-life’ teacher ever could.

One of the obvious challenges thrown up by these innovations is the altered role of the human teacher. Such technologies are usually justified as a source of support for teachers, delivering insights that “will empower teachers to decide how best to marshal the various resources at their disposal”. These systems, platforms and agents are designed to give learners their undivided attention, spending far more time interacting with each individual than a human teacher ever could. As a result, it is argued, these technologies can provide classroom teachers with detailed performance indicators and specific insights about their students. AI-driven technology can therefore direct teachers’ attention toward the neediest groups of students – acting as an ‘early warning system’ that flags the students most in need of personal attention.

On the one hand, this might sound like welcome assistance for over-worked teachers. After all, who would not welcome an extra pair of eyes and an expert second opinion? Yet rearranging classroom dynamics along these lines prompts a number of questions about the ethics, values and morals of allowing decisions to be made by machines rather than humans. As recent AI-related controversies in healthcare, criminal justice and national elections have made evident, the algorithms that power these technologies are not neutral, value-free confections. Any algorithm is the result of somebody deciding on a set of complex coded instructions and protocols to be repeatedly followed. Yet in an era of proprietary platforms and impenetrable code, this logic typically remains imperceptible to most non-specialists. This is why commentators sometimes refer to the ‘secret sauce’ behind the algorithms that drive popular search engines, news feeds and content recommendations. Something in these coded recipes seems to hit the spot, but only a very few people are ‘in the know’ about the exact nature of these calculations.

This brings us to a crucial point in any consideration of how AI should be used in education.

If implementing an automated system entails following someone else’s logic then, by extension, this also means being subject to their values and politics.

Even the most innocuous logic of [IF X THEN Y] is not a neutral, value-free calculation. Any programmed action along these lines rests on pre-determined understandings of what X and Y are, and how they relate to each other. These understandings are shaped by the ideas, ideals and intentions of programmers, as well as by the cultures and contexts in which these programmers are situated. So a key question to ask of any AI-driven teaching system is: who is now being trusted to program the teaching? Most importantly, what are their values and ideas about education? In implementing any technological system, what choices and decisions are now being pre-programmed into our classrooms?
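To make the point concrete, here is a minimal, hypothetical sketch in Python of an [IF X THEN Y] rule that flags students for a teacher’s attention. It is not drawn from any real product: the `Student` fields, the thresholds and both rule variants are illustrative assumptions. It shows that defining X (‘at risk’) means choosing which data count and where the cut-offs fall, and that two equally plausible definitions flag different students.

```python
from dataclasses import dataclass


@dataclass
class Student:
    name: str
    attendance: float       # hypothetical field: fraction of sessions attended, 0.0 to 1.0
    predicted_score: float  # hypothetical field: model-predicted score, 0 to 100


# Hypothetical cut-offs: each is a choice made by whoever writes the rule,
# not a fact about the student.
AT_RISK_SCORE = 50.0
AT_RISK_ATTENDANCE = 0.8


def flag_for_attention(s: Student) -> bool:
    """IF the student counts as 'at risk' THEN flag them for the teacher.

    This programmer defines 'at risk' as EITHER a low predicted score
    OR low attendance.
    """
    return s.predicted_score < AT_RISK_SCORE or s.attendance < AT_RISK_ATTENDANCE


def flag_for_attention_strict(s: Student) -> bool:
    # An alternative (equally hypothetical) programmer requires BOTH conditions.
    return s.predicted_score < AT_RISK_SCORE and s.attendance < AT_RISK_ATTENDANCE


students = [
    Student("A", attendance=0.95, predicted_score=45.0),
    Student("B", attendance=0.60, predicted_score=72.0),
    Student("C", attendance=0.55, predicted_score=40.0),
]

print([s.name for s in students if flag_for_attention(s)])         # ['A', 'B', 'C']
print([s.name for s in students if flag_for_attention_strict(s)])  # ['C']
```

Swapping a single `or` for an `and` takes two students off the teacher’s radar, and nothing in the data itself says which version is ‘correct’.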

The ethical dilemma of robot teachers

The complexity of attempting to construct a computational model of any classroom context is echoed in the ‘Ethical Dilemma of the Self-Driving Car’. This test updates a 1960s thought experiment known as ‘the Trolley Dilemma’, which posed a simple question: would you deliberately divert a runaway tram to kill one person rather than the five unsuspecting people it is currently hurtling toward? The updated test – popularised by MIT’s ‘Moral Machine’ project – explores human perspectives on the moral judgements made by the machine intelligence underpinning self-driving cars. These hypothetical scenarios involve a self-driving car that is about to crash through a pedestrian crossing. The car can decide to carry on along the same side of the road or veer into an adjacent lane and plough into a different group of pedestrians. Sometimes a third option allows the car to sacrifice its passengers by swerving into a barrier.

Unsurprisingly, this third option is very rarely selected by respondents. Few people seem prepared to ride in a driverless car that is programmed to value the lives of others above their own. Instead, people usually prefer to choose one group of bystanders over the other. Contrasting choices in the test might include hitting a homeless man as opposed to a pregnant woman, an overweight teenager or a healthy older couple. These scenarios are complicated further by considering which of these pedestrians is crossing on a green light and which is jaywalking. These are extreme scenarios, yet they neatly illustrate the value-laden nature of any ‘autonomous’ decision. Every machine-based action has consequences and side-effects for ‘users’ and ‘non-users’ alike. Some people get to benefit from automated decision-making more than others, and this holds even for the more mundane decisions implicit in the day-to-day life of the classroom.

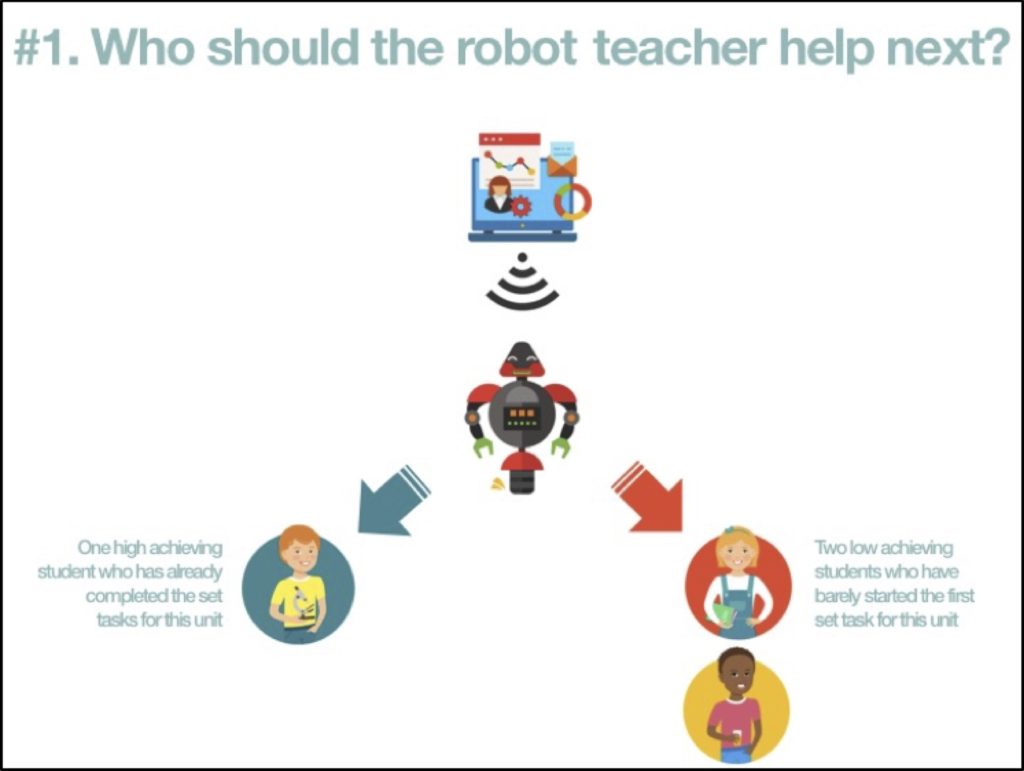

So what might an educational equivalent of this dilemma be? What might the ‘Ethical Dilemma of the Robot Teacher’ look like? Here we might imagine a number of scenarios addressing the question: ‘Which students does the automated system direct the classroom teacher to help?’. For example,

who does the automated system tell the teacher to help first – the struggling girl who rarely attends school and is predicted to fail, or a high-flying ‘top of the class’ boy?

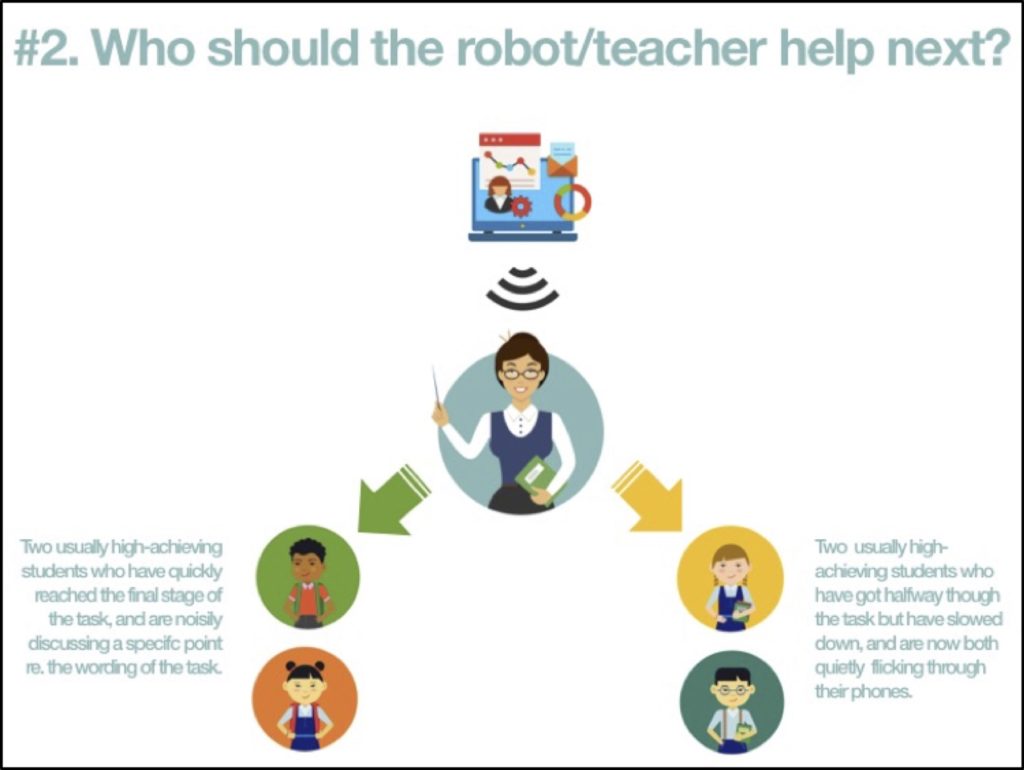

Alternatively, what logic should decide whether to direct the teacher toward a group of students who are clearly coasting on a particular task, or toward a solitary student who seems to be excelling? What if this latter student is in floods of tears? Perhaps there needs to be a third option focused on the well-being of the teacher. For example, what if the teacher decides to ignore her students for once, and instead grabs a moment to summon some extra energy?
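The same kind of choice can be sketched for the robot teacher. Below is a hypothetical Python sketch, assuming a system that ranks students by a weighted ‘priority’ score and sends the teacher to the highest-ranked one. The `Case` fields, the scores and the three weightings are invented for illustration and do not describe any actual platform; the point is that, given identical data, each weighting directs the teacher to a different student.

```python
from dataclasses import dataclass


@dataclass
class Case:
    label: str
    risk_of_failing: float  # hypothetical model output, 0.0 to 1.0
    attainment: float       # hypothetical current performance, 0.0 to 1.0
    distress: float         # hypothetical 'emotion detector' output, 0.0 to 1.0


def priority(c: Case, weights: dict[str, float]) -> float:
    # A weighted sum: the weights encode whose needs the designer treats as most urgent.
    return (weights["risk"] * c.risk_of_failing
            + weights["attainment"] * c.attainment
            + weights["distress"] * c.distress)


def who_first(cases: list[Case], weights: dict[str, float]) -> str:
    # Direct the teacher to the single highest-priority student.
    return max(cases, key=lambda c: priority(c, weights)).label


cases = [
    Case("struggling, rarely attends", risk_of_failing=0.9, attainment=0.2, distress=0.3),
    Case("top of the class", risk_of_failing=0.05, attainment=0.95, distress=0.1),
    Case("excelling, in tears", risk_of_failing=0.1, attainment=0.85, distress=0.9),
]

# Three hypothetical value positions, expressed as weights.
remedial = {"risk": 1.0, "attainment": 0.0, "distress": 0.2}
results_driven = {"risk": 0.2, "attainment": 1.0, "distress": 0.0}
wellbeing = {"risk": 0.3, "attainment": 0.0, "distress": 1.0}

print(who_first(cases, remedial))        # struggling, rarely attends
print(who_first(cases, results_driven))  # top of the class
print(who_first(cases, wellbeing))       # excelling, in tears
```

Note what the sketch cannot express: the teacher’s option of attending to her own well-being appears nowhere unless someone decides to program it in, and a weighting has to be fixed before any student walks into the room.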

The limits of automated calculations in education

Even these over-simplified scenarios involve deceptively challenging choices, quickly pointing to the complexity of classroom work. Tellingly, most teachers soon get frustrated when asked to engage in educational versions of the dilemma, complaining that these scenarios seem insultingly simplistic. There is a range of other factors that one needs to know in order to make an informed decision. These might include students’ personalities and home lives, the sort of day that everyone has had so far, the nature of the learning task, the time of the academic year, assessment priorities, and so on. In short, teachers insist that their working lives are not this black-and-white, and that their professional decisions are based on a wealth of considerations.

This ethical dilemma is a good illustration of the skills and sensitivities that human teachers bring to the classroom setting. Conversely, all the factors that are not included in the dilemma point to the complexity of devising algorithms that might be considered appropriate for a real-life classroom. Of course, many system developers consider themselves well capable of measuring thousands (if not millions) of different data-points in order to capture this complexity. Yet such confidence in quantification quickly diminishes in light of the intangible, ephemeral factors that teachers often insist should be included in these hypothetical dilemmas. The specific student that a teacher opts to help at any one moment can be a split-second decision based on intuition, broader contextual knowledge about the individual, and a general ‘feel’ for what is going on in the class. A host of counter-intuitive factors can prompt a teacher to go with their gut feeling rather than what is considered to be professional ‘best practice’.

So, how much of this is it possible (let alone preferable) to measure and feed into any automated teaching process? A human teacher’s decision to act (or not) is based on professional knowledge and experience, as well as personal empathy and social awareness. Much of this might be intangible, unexplainable and spur-of-the-moment, leaving good teachers trusting their own judgement over what a training manual might suggest they are ‘supposed’ to do. The ‘dilemmas’ just outlined reflect situations that any human teacher will encounter hundreds of times each day, with each response dependent on the nature of the immediate situation. What other teachers ‘should do’ in similar predicaments is unlikely to be something that can be written down, let alone codified into a set of rules for teaching technologies to follow. What a teacher decides to do in a classroom is often a matter of conscience rather than computation. These are significant and incredibly difficult issues to attempt to ‘engineer’. Developers of AI-driven education need to tread with care. Moreover, teachers need to be more confident in telling technologists what their products are not capable of doing.

The two ‘dilemma’ images were illustrated using graphics designed by Katemangostar / Freepik

Neil Selwyn

Neil Selwyn is a Professor in the Faculty of Education, Monash University and previously Guest Professor at the University of Gothenburg. Neil’s research and teaching focus on the place of digital media in everyday life, and the sociology of technology (non)use in educational settings.

@neil_selwyn is currently writing a book on the topic of robots, AI and the automation of teaching. Over the next six months he will be posting pieces on the topic in various education blogs … hopefully resulting in: Selwyn, N. (2019) Should Robots Replace Teachers? Cambridge: Polity.
