Sofia Serholt works in the Computer Science & Engineering department at Chalmers University of Technology and is a member of the LIT community in Gothenburg. She is one of the leading European researchers in the area of robots and education. In this interview, Sofia talks with Neil Selwyn about her recent work, and the growing interest being shown in AI and education.

NEIL: So, first off, for the uninitiated, exactly how are robots being used in schools? What sort of technologies are we talking about here?

SOFIA: A big issue in education right now is programming robots – using robots as tools to learn different programming languages. However, my research is mostly focused on robots that actually teach children things and/or learn from them, and perhaps interact with them socially. So, these are humanoid robots that are similar to humans in different ways. Then, we also see robots that can be remotely controlled in classrooms – like Skype on Wheels – and these can be used by children who can’t be in the classroom for different reasons such as if they’re currently undergoing cancer treatment. We have some very rare situations where teachers have remotely controlled robots to be able to teach a class as well. So, that’s basically how the field is right now.

NEIL: Now, this all sounds really kind of futuristic and interesting in theory, but in practice, one of the main issues is how people actually accept these machines, and how they gain trust in them. What have you found out about people’s reactions to these technologies in the classroom?

SOFIA: When it comes to the research that I’ve done in the classroom, I have put actual robots in the classroom for several months and studied interactions with them. However, I haven’t done any rigorous research about acceptance in those cases. Still, I do see that children are generally optimistic and positive about it … outwardly anyway. And teachers, of course, appreciate having a researcher there and being part of a project, and it’s fun and interesting. But, teachers haven’t been very involved in the actual robot and the interactions. Instead it’s been on the sidelines of what they’re doing. When I talk to teachers and students who are not part of any study, I can see that students are concerned about certain things that robots can do or what they shouldn’t do. And, the privacy issue is one thing that they are very concerned about. For example, they don’t want to be recorded by a robot, which might be necessary for it to be able to interpret different things about the person it’s interacting with. Also, children don’t want to be graded by a robot. And, this is something that also resonates with teachers, so they don’t want to give away their authority in that sense. So, there are a lot of open questions right now – for example, how this technology might impact children in the long run. We don’t know very much about this. We know that our children have toys and grow up with toys. But these robots are a new thing – kind of like a social interaction partner that is not really human, but does kind of mirror human behaviour. And also, it has certain restrictions, like it might not be able to speak with a humanlike intonation. So how does that affect children if they were to grow up with these robots? In reality that’s a kind of study we can’t conduct.

NEIL: So, can you just tell us a bit about the research you’ve done yourself on robots in the classroom?

SOFIA: I’ve looked at interaction breakdowns. In one study I selected situations or instances where children either became notably upset or they became inactive when interacting with the robot. Not because they were bored with the actual game … that didn’t actually happen because it was very engaging and fun. But these were instances when students couldn’t do anything – they couldn’t proceed – and also when they started doing other stuff in the room, or began to talk to their friends instead of working on the topic. And, what I saw was that when the robot doesn’t understand what the child is saying, it generates a situation where on the one hand you have a robot who can express a lot of stuff and tell you what to do. But, if the child can’t ask the robot a question or show uncertainty, it creates a very difficult situation when they’re alone with this robot. And this is what leads to these breakdowns – when students need help from the outside. I looked at six randomly picked students over the course of the three-and-a-half months that I was in the school. And out of those sessions, I spotted 41 breakdowns. Some children were very upset about not understanding what to do, and not being able to move on. Some got really angry at the robot for disrupting and destroying the strategy that they were using. But, I think the worst case was when students actually felt that they were not good enough and they put the blame on themselves. You know, because robots and computers have a lot of authority in that sense. You know, you don’t think that your calculator lies to you?

NEIL: Yeah, yeah.

SOFIA: You trust in your calculator more than your own calculations. And, I spotted a similar tendency here: ‘If the robot broke down when I was with it, then it must not like me.’ And, having to deal with those kinds of situations is ethically problematic, I think.

NEIL: So, is that a design problem? Can we design robots to suddenly be a bit more imprecise, or as you say, not to kind of break down the magic between the student and the machine?

SOFIA: I think we could have obviously accomplished a lot more than what we did in that study. We used a teaching robot and there were a lot of outside things that affected it. You know, sunlight affects it, heat in the room affects it, how long it’s been going on affects the ability of the robot to work like it should. But, nevertheless we have to kind of ask ourselves how far along that road can we go to uphold this illusion? The illusion that this is a sentient being in the eyes of the student. I think that’s a question for philosophy and ethics really.

NEIL: So, you’ve – you’ve moved very quickly from looking at these things as teaching and learning technologies to ethical questions. These are big kind of issues to be grappling with. So, what are the main ethical questions that need to be asked here? We’ve got privacy …

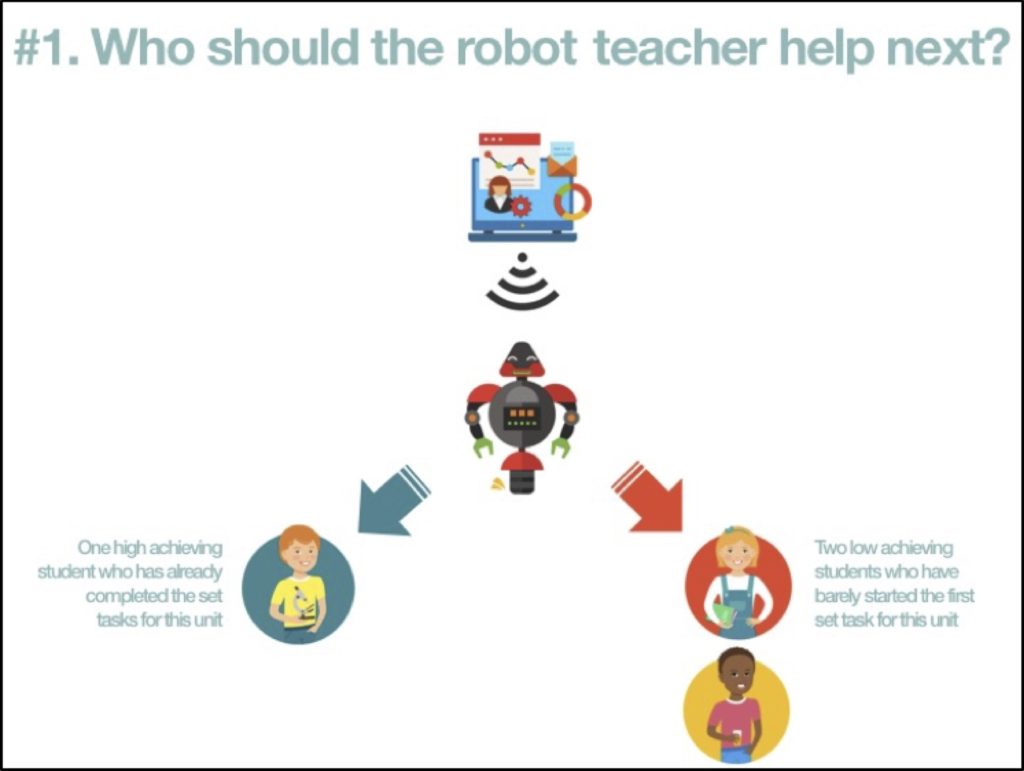

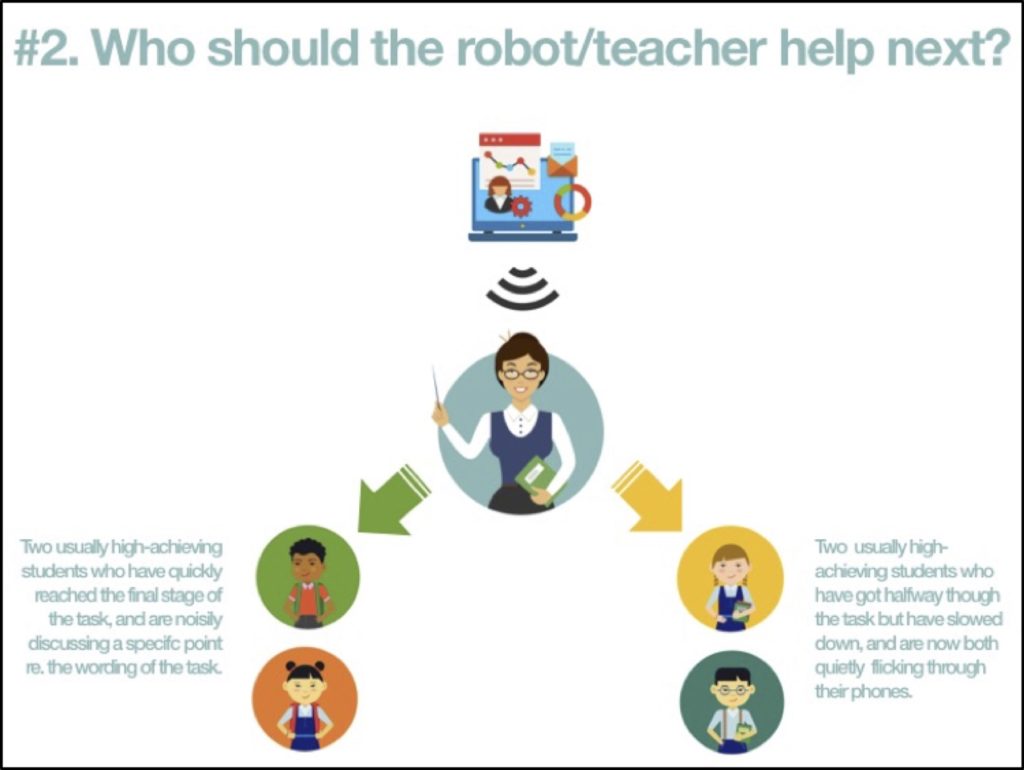

SOFIA: Yeah, and we have issues of responsibility. For example, who is responsible for the robot? We see a lot of companies developing these technologies and selling them. However, where does their responsibility end and where does the teacher’s responsibility begin? And, according to teachers, they want to be the responsible party in terms of what’s going on in the classroom. However, they do feel at the same time that they can’t have this responsibility if they can’t monitor what’s going on. So, the idea with a teaching robot is that it’s supposed to work autonomously, and it’s not supposed to be under the control of teachers. And, oftentimes the teachers don’t even know how it works, right, so they can’t control it.

But one teacher asked how this benefits them … because if they have to walk around and keep an eye on the robot all the time, then what kind of sacrifice is this for their roles as teachers? And, we also have the inevitable fact that robots do break and robots don’t support physical interaction as much as we are led to believe. Because they look humanoid, it is tempting to think you can shake its hand, you can give it a high five, you can give it a hug … but this usually doesn’t work unless the robot is programmed to go along with this.

NEIL: So, this issue of whether the robot is going along with it leads me to think about questions of deception. If the robot is mimicking certain behaviour, is that an ethical issue as well?

SOFIA: Well, I think it might become an issue if robots have these kinds of social interaction features. Of course, there is a level of deception in that, because they’re not social. You can erase the program, you can accidentally erase a log about one child and then the robot won’t remember that child anymore. That’s a big issue that I think we’re going to be seeing a lot more of.

NEIL: So, just backtracking from the ethics for a second, one of the things that springs to mind is why on earth should we be using these machines if there are all these issues? Presumably there are kind of very strong learning and teaching rationales for using robots in the classroom. What sort of things do we know about the learning that can take place around a robot?

SOFIA: We have certain indications that robots are preferred by students over virtual agents – for example, intelligent tutoring systems that have a virtual agent with different levels of animation in the agent. So, the more humanlike the embodiment of the robot is, the more physical it is, the better the learning outcomes. However, these are not long-term field studies that I’m talking about here. These findings come from very controlled experiments, often not even with children. And, so we honestly don’t know too much about the learning outcomes. In my study, I had a robot that taught geography and map-reading to children, and also sustainability issues. The learning goal was that the children should be able to reason about sustainability and the economic issues involved, the social issues involved, and it’s a complex interaction. So, we didn’t see any learning outcomes in that regard. In map-reading there was a slight learning improvement, but not as much as one would hope after a month’s worth of interactions with this robot.

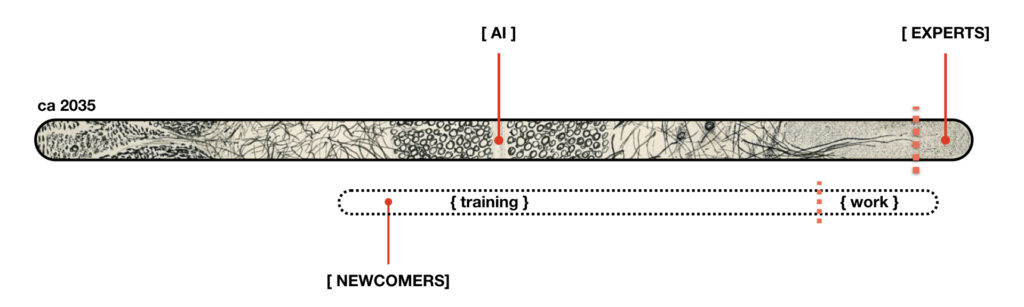

NEIL: So, this is very future focused research, it’s a very future focused area of education, and doing research in this area must be really, really tricky. And also, there’s a lot of hype in this area as well. So, looking forward in the future, what do you realistically think we’ll see in 20 years’ time? And, what is actually hype?

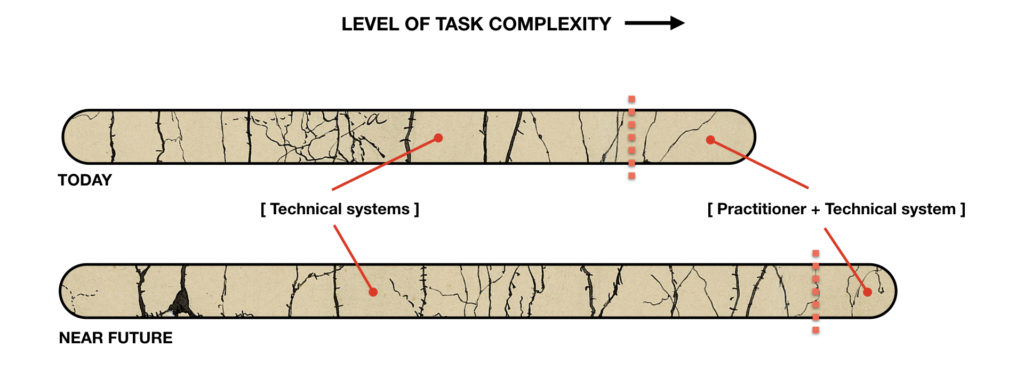

SOFIA: I think as soon as you talk about the social aspects of interaction, then we have a problem. And if we talk about AI as being very clever at certain specific tasks, then yes, we have this already – this is technology that is coming. But, if we talk about a general social intelligence that’s supposed to make its own decisions and deductions based on how you are, and it gets to know you in human terms, and it gets to reason and think, then I’m not sure I believe this is going to happen at all. This would require a very complex form of programming and machine learning, so I guess we’ll see … I’m a bit reluctant to answer that question, because ‘who knows’?

NEIL: Now, I just wanted to finish on a nice easy question. It’s often said that robots and AI actually raise this existential question of ‘what does it mean to be human in a digital age’? I was wondering if your work has led you to any such insights? What will it mean to be human in the 21st century? And, what implications might this have for education?

SOFIA: I think we’re going to start to see that there is something else to human nature that technology might not be able to fill. The question is how we want to proceed knowing this. And children are what we define as a vulnerable group in society that we have some sort of duty of care towards. And, if we see all these problems with technology, if we see problems and potential suffering, then maybe we should talk about those issues and not just sweep them under the carpet. I don’t think there’s going to be any revolutionary situation where you see that robots somehow make us question our own sense of being in the world. But, I do think that if we interact with them too much, then we’re going to have problems knowing what we are. So it’s important that we don’t put this technology in the hands of children who are too young to be able to critically assess what’s going on.