Speculation runs high about what the next global business opportunity will be. As reported by the World Economic Forum, the futurist Thomas Frey argues that the next sector to be disrupted by technological innovation will be education. Education is undeniably a massive societal enterprise, and whoever can sell a believable product that plugs into it would find themselves in a favorable financial position.

I’ve been predicting that by 2030 the largest company on the internet is going to be an education-based company that we haven’t heard of yet (Thomas Frey, DaVinci Institute)

His prediction rests on a belief in the combination of artificial intelligence and personalized learning. While it will surprise no one that AI is currently much hyped, the idea of tailoring instruction to each student’s individual needs, skills, and interests is also gaining considerable traction.

A report on the effects of personalized learning carried out by the Rand Corporation points to favorable outcomes. The reported findings indicate that, compared to their peers, students in schools using personalized learning practices made greater progress over the two school years studied.

Although results varied considerably from school to school, a majority of the schools had statistically significant positive results. Moreover, after two years in a personalized learning school, students had average mathematics and reading test scores above or near national averages, after having started below national averages. (p. 8)

The authors are, however, careful to point out that the observed effects were not purely additive over time.

[For] example, the two-year effects were not as large as double the one-year effects (p. 10)

Since they also had difficulty separating general school effects from personalized learning effects, they urge readers to be careful when extrapolating from the results.

While our results do seem robust to our various sensitivity analyses, we urge caution regarding interpreting these results as causal. (p. 34)

So how are these kinds of results interpreted by futurists and business leaders? By conjecture and hyperbole, is the short answer. Thomas Frey speculates that these new effective forms of education will enable students to learn at four to ten times the ordinary speed. Others, like Joel Hellermark, promise even greater gains. Hellermark is CEO of Sana Labs, a company specializing in adaptive learning systems.

Adaptive learning systems use computer algorithms to orchestrate the interaction with students so as to continuously provide customized resources. The core idea is to adapt the presentation of educational materials by varying pace and content according to the specific needs of the individual learner. The system assesses those needs based on the student’s previous and current performance. Since Sana Labs uses artificial intelligence to run this process, it is a good candidate for the kind of company Frey thinks might grow considerably in the near future. The company is currently attracting funding from big investors, but to gain the interest of the likes of Mark Zuckerberg and Tim Cook you must be selling a compelling idea. In this regard, the Rand report provides fertile ground, with Hellermark interpreting the results thus:

If you understand how people learn, you can also personalize content to them. And the impact of personalised education is extraordinary. In one study by the Bill and Melinda Gates foundation [i.e., the Rand report] students using personalised technique had 50% better learning outcomes in a year. Meaning that over a 12-year period due to compounding these students would learn a hundred times more. (Joel Hellermark)

However, the gap between interpretations like this, made by business leaders aiming to sell adaptive learning systems to schools, and those of the Rand report’s authors themselves is striking.
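The arithmetic behind the quoted claim can be checked in a few lines. The sketch below takes the 50% figure at face value and compares the yearly compounding the quote assumes with a simple linear extrapolation. The variable names and the linear baseline are illustrative assumptions; neither calculation appears in the Rand report, whose authors noted that the two-year effects were smaller than double the one-year effects.

```python
# Illustrative arithmetic only. The 50% yearly gain is taken from the quote
# above, not from the Rand report itself.
YEARLY_GAIN = 0.5
YEARS = 12

# Assumption made in the quote: the gain multiplies on itself every year.
compounded = (1 + YEARLY_GAIN) ** YEARS

# Hypothetical baseline: the gain merely adds up from year to year.
linear = 1 + YEARLY_GAIN * YEARS

print(f"compounded over {YEARS} years: {compounded:.1f}x")
print(f"linear over {YEARS} years:     {linear:.1f}x")
```

Under these assumptions, compounding yields a multiplier of roughly 130, which is presumably where "a hundred times more" comes from. A linear reading yields a multiplier of 7, and the report's own caveat about non-additive effects suggests that even this may be too optimistic.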

The Rand report also points out the importance of heterogeneous classes for learning. This complicates the personalized learning approach espoused by many purveyors of adaptive learning systems, which focus entirely on the student as an individual learner. But why should heterogeneity and sociality add to an individual’s learning? A partial answer to this question might lie in the notion of social responsivity (as specifically found in the works of Johan Asplund and in the sociological tradition of Ethnomethodology more generally).

Social responsivity builds on the simple idea that humans are fundamentally social beings who are interconnected by means of communication and other forms of social action. This connectedness is built up as chains of interaction through responses to earlier actions. It is such a fundamental aspect of who we are that stripping a person of their ability to belong in this world, to see and to be seen by others, can be used as a form of punishment, with solitary confinement perhaps the clearest example.

Following this line, there is but a small step from the mechanics of adaptive learning systems that function by tracking all the activity of individual learners to the ideas of the English philosopher Jeremy Bentham and his original formulation of the Panopticon, or what he also called the Inspection-House. Way down the list, after institutions such as penitentiaries, factories, mad-houses and hospitals, Bentham ventured to apply his principles of an architecture for inspection to schools also. In his plan, students should work as solitaries under the watchful eye of the master. Here, he wrote:

All play, all chattering – in short, all distraction of every kind, is effectually banished by the central and covered situation of the master, seconded by partitions or screens between the scholars (Bentham, 1787)

Even though he advocated that the idea should be tested for education, he expressed severe qualms about the power one would hand over to whoever would govern such an institution.

Doubts would be started — Whether it would be advisable to apply such constant and unremitting pressure to the tender mind, and to give such herculean and ineludible strength to the gripe of power? (Bentham, 1787)

The central issue and challenge that I would like to raise here is that modern personalized learning approaches, as typified by adaptive learning systems, build on the same general understanding as Bentham: that traditional whole-class education is defective. And while personalized education criticizes traditional teaching for being a blunt instrument, it remains blissfully ignorant of the entire dimension of social responsivity. Therefore, even with the best of intentions, it works to create learning environments built around asociality.

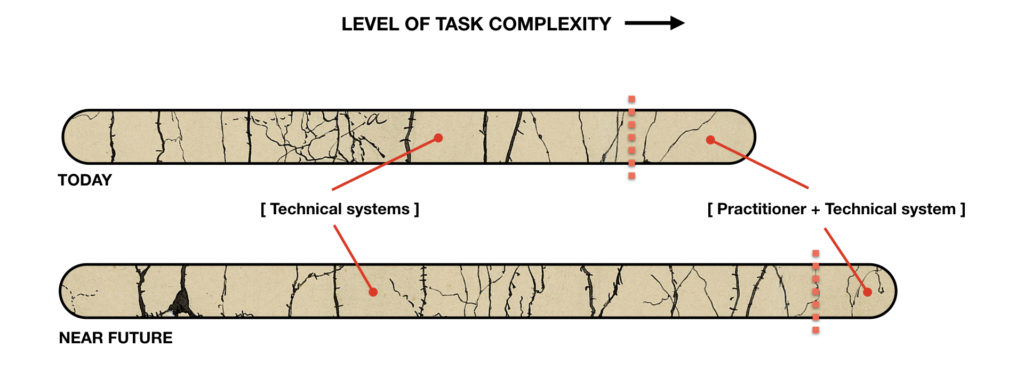

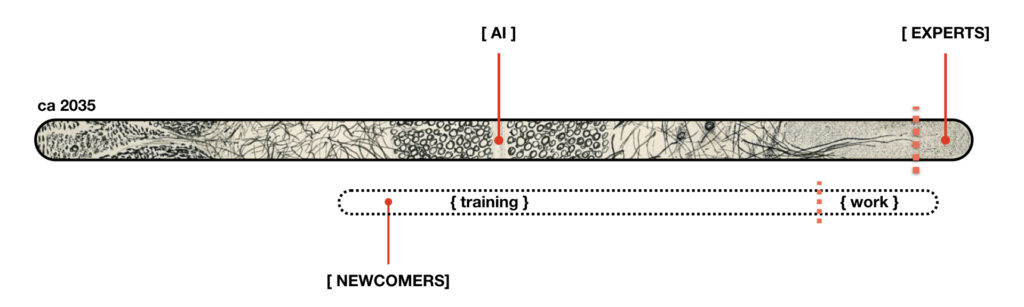

So, what could be done then? We don’t simply want to repeat the past. From Thorndike, via Pressey and Skinner, to the systems we see today, the idea that machines might provide efficient ways of adapting instruction to the needs of students has been aired and tested on and off for over a century. If we now wish to use AI to make a new attempt to better adapt instruction to students, we also need to devise additional measures of student progress. What we need are concepts and measures that can incorporate and operate on the level of the group, because we must acknowledge that human interaction and the social responsivity it supports are vastly important aspects of how we live and learn.

Like the AI-driven adaptive learning systems that are emerging today, the systems I would like to see should make suggestions for what learners should do next, but not only for individual learners. They should also be able to support teachers in deciding which class activities to offer next and which students should work together. If we can develop adaptive learning systems that support rather than obstruct social responsivity, then I think they can begin to have a real impact.
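To make the idea of group-level suggestions a little more concrete, here is a minimal sketch of how a system might propose which students should work together. Everything in it is hypothetical: the function name `suggest_pairs`, the single mastery score per student, and the rule of pairing the currently strongest with the currently weakest student are assumptions chosen for illustration, not a description of any existing product or of what good pedagogy requires.

```python
def suggest_pairs(mastery):
    """Suggest heterogeneous pairs from a mapping of student name to score.

    `mastery` is a hypothetical per-student estimate between 0 and 1, of the
    kind an adaptive system might already maintain for each learner.
    Returns (pairs, unpaired), where unpaired is a name or None.
    """
    # Rank from lowest to highest score; ties are broken by name so the
    # result is deterministic.
    ranked = sorted(mastery, key=lambda name: (mastery[name], name))

    pairs = []
    low, high = 0, len(ranked) - 1
    while low < high:
        # Pair the lowest remaining score with the highest remaining score.
        pairs.append((ranked[low], ranked[high]))
        low += 1
        high -= 1

    # With an odd number of students, the one in the middle is left over
    # for the teacher to place.
    unpaired = ranked[low] if low == high else None
    return pairs, unpaired


scores = {"Ada": 0.9, "Ben": 0.2, "Cy": 0.6, "Di": 0.4, "Eve": 0.5}
pairs, unpaired = suggest_pairs(scores)
print(pairs)     # [('Ben', 'Ada'), ('Di', 'Cy')]
print(unpaired)  # Eve
```

The point of the sketch is only that the unit of recommendation is the group rather than the individual. The final decision is left with the teacher, who knows things about the class that no score captures.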